Accuracy-Precision-Recall

In machine learning classification, accuracy is a simple metric: the number of cases the model classified correctly divided by the total number of cases.

Accuracy = Number of cases predicted correctly / Total number of cases

If all the predictions are correct, the accuracy score will be 1.

If all the predictions are wrong, the accuracy score will be 0.

Is accuracy a good metric for evaluating a classification model?

The answer is no, at least not on its own. Why?

Assume that in a sample of 100 credit card transactions, one is fraudulent; that is, 1% of all transactions are fraudulent. A classification model that blindly labels every transaction as genuine is 99% accurate in this case, yet it catches no fraud at all. A single accuracy number hides how the errors split between false positives and false negatives.
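The credit card example can be checked with a few lines of Python. This is a minimal sketch: the labels are made up to match the 1-in-100 scenario above, and `accuracy` is a hypothetical helper written for illustration, not a library function.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

# 100 transactions: 1 = fraudulent, 0 = genuine (illustrative data)
y_true = [1] + [0] * 99
# A "model" that blindly labels every transaction as genuine
y_pred = [0] * 100

score = accuracy(y_true, y_pred)
print(score)  # 0.99
```

The score looks excellent even though the one fraudulent transaction was missed.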

Confusion matrix

First we need to understand four terms: true positive, true negative, false positive, and false negative. In this example the positive class is a fraudulent transaction and the negative class is a genuine one.

True positive (TP): Cases where the classifier predicted positive (fraudulent transaction) and the actual class was also positive (actually fraudulent).

True negative (TN): Cases where the classifier predicted negative (genuine transaction) and the actual class was also negative (actually genuine).

False positive (FP): Cases where the classifier predicted positive (fraudulent transaction) but the actual class was negative (actually genuine). This is called a Type 1 error.

False negative (FN): Cases where the classifier predicted negative (genuine transaction) but the actual class was positive (actually fraudulent). This is called a Type 2 error.
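The four definitions can be turned into a simple counting routine. The sketch below assumes labels are encoded as 1 for fraudulent and 0 for genuine; `confusion_counts` and the sample data are hypothetical, chosen only to illustrate the idea.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN), treating 1 (fraudulent) as the positive class."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 0 and actual == 0:
            tn += 1
        elif predicted == 1 and actual == 0:
            fp += 1  # Type 1 error: genuine transaction flagged as fraud
        else:
            fn += 1  # Type 2 error: fraudulent transaction missed

    return tp, tn, fp, fn

# Illustrative data: 1 = fraudulent, 0 = genuine
y_true = [1, 1, 0, 0, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)  # 2 4 1 1
```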

In fraud detection, Type 2 errors are usually more serious than Type 1 errors. False negatives allow fraudulent transactions to go through, defeating the very purpose of a fraud detection system.

Evaluating the model's precision and recall exposes these errors, which accuracy alone can hide.

Precision asks: out of all cases flagged as fraudulent, how many were actually fraudulent?

Precision = TP / Total positive predictions = TP / (TP + FP)

Recall asks: out of all actually fraudulent transactions, how many were detected as fraudulent?

Recall = TP / All actual fraudulent transactions = TP / (TP + FN)
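Both formulas translate directly into code. The following is a sketch under stated assumptions: the counts are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here for illustration (the ratio is strictly undefined in that case).

```python
def precision(tp, fp):
    """TP / (TP + FP); returns 0.0 if nothing was predicted positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """TP / (TP + FN); returns 0.0 if there are no actual positives."""
    return tp / (tp + fn) if (tp + fn) else 0.0

# Hypothetical counts: 8 frauds caught, 2 false alarms, 8 frauds missed
tp, fp, fn = 8, 2, 8

p = precision(tp, fp)
r = recall(tp, fn)
print(p, r)  # 0.8 0.5
```

Here most flagged transactions really are fraudulent (precision 0.8), but only half of all fraud is caught (recall 0.5).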

Precision is about correctness and recall is about completeness. A good classification model balances the two, because pushing one higher often lowers the other.

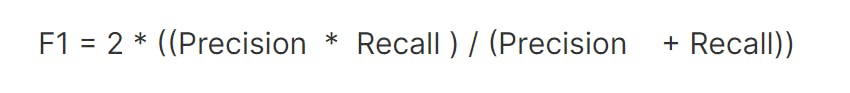

The F1-score combines the precision and recall of a classifier into a single metric by taking their harmonic mean: F1 = 2 × Precision × Recall / (Precision + Recall).
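A short sketch shows why the harmonic mean is useful: it stays low unless both precision and recall are reasonably high. The function name `f1_score_from` and the input values are hypothetical, and returning 0.0 when both inputs are zero is a convention assumed here.

```python
def f1_score_from(precision, recall):
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Balanced classifier: the harmonic mean equals the shared value
balanced = f1_score_from(0.5, 0.5)

# Lopsided classifier: perfect precision but very low recall
lopsided = f1_score_from(1.0, 0.1)

print(balanced)            # 0.5
print(round(lopsided, 3))  # 0.182
```

The arithmetic mean of 1.0 and 0.1 would be 0.55, whereas the F1-score is pulled toward the weaker of the two values.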